In what follows I'm going to talk about Artificial Intelligence (AI), Machine Learning (ML) and Artificial Neural Networks (ANNs). More to the point: what are they good for, in practical terms? If you are wondering what you can use them for, the answer is "most everything". From light to radio, from radio to sound, from graphics to games, from design to medicine, the list goes on. Here are some working concepts.

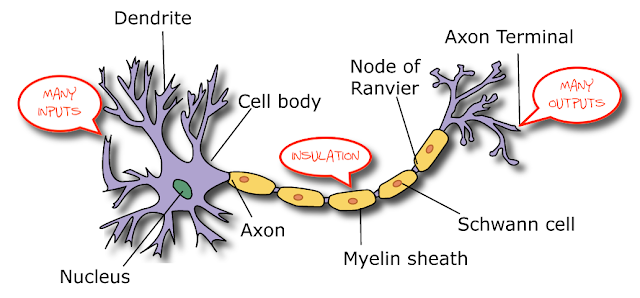

Artificial neurons, the building blocks of ANNs, are an abstraction of biological neurons. The first thing we notice is that biological neurons use "many inputs to many outputs" connectivity. So in a mathematical sense they are not classic functions, because a function only has one output.


Artificial neurons have a "many inputs to one output" connectivity. So they are functions. Functions can have many inputs, provided they only have one output.

Figure: Artificial Neuron aka 'Perceptron'

Figure: Neural Network with Four Layers

Another thing we notice about the artificial neuron is that the magnitude of its output is clamped to some maximum value by the activation function, no matter how large the inputs get. So if you are in space staring at the sun, your brain doesn't fry because of the neural output; it fries because you are standing next to the sun. How peaceful.
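Here is a minimal sketch of that idea in plain Python: many inputs go in, one bounded output comes out. The weights, bias and input values are made up for illustration, and the sigmoid is just one possible activation function.

```python
import math

def sigmoid(z):
    """S-shaped activation: squashes any real number into the range 0..1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)            # this branch avoids overflow for very negative z
    return e / (1.0 + e)

def neuron(inputs, weights, bias):
    """Many inputs, one output: a weighted sum followed by the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

# Hypothetical weights and bias, chosen only for illustration.
weights = [0.5, -0.25, 0.1]
bias = 0.0

modest = neuron([1.0, 2.0, 3.0], weights, bias)     # ordinary inputs
huge = neuron([100.0, 0.0, 0.0], weights, bias)     # staring at the sun

print(modest, huge)   # both stay between 0 and 1, however big the input
```

No matter how hard you shout at this neuron, its answer never climbs above 1.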

I would be remiss here if I didn't mention that many mainstream programming languages have traditionally implemented the concept of functions in a similar way: many inputs were allowed, but only one output was returned per function call.

Python, which has become the de facto language of AI, allows one to return many outputs from a single function call (they travel together as a tuple), thus implementing many-to-many relations. This is extremely convenient and amplifies the expressive power of the language considerably.
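A small sketch of what that looks like in practice. The function name `min_max_mean` is my own invention for this example, not part of any library.

```python
def min_max_mean(values):
    """Return three results at once; Python packs them into a single tuple."""
    return min(values), max(values), sum(values) / len(values)

# Unpack the three outputs straight into three names.
lowest, highest, average = min_max_mean([2, 4, 9])

# Or keep them together; what comes back is one tuple holding all three.
packed = min_max_mean([2, 4, 9])

print(lowest, highest, average)
print(type(packed).__name__)
```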

There are many details one must attend to in programming neural nets. These include the number of layers, the interconnection topology, the learning rate and the activation function, such as the S-shaped sigmoid function shown above at the tail of the Artificial Neuron. Activation functions come in many flavors. There are also cost or loss functions that enable us to evaluate how well a neuron is performing for the weights of each of its inputs. These cost functions come in linear, quadratic and logarithmic forms, the last of which has the mystical name "Cross Entropy". Remember, if you want to know more about something you can always google it.
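To make these names a little less mystical, here is a rough sketch of two activation functions and the three kinds of cost function for a single output `y` and target `t`. Reading "linear" as the absolute error is my assumption, and the cross entropy shown is the two-class (binary) form.

```python
import math

def sigmoid(z):
    """S-shaped activation with outputs between 0 and 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    """Another popular flavor: pass positives through, block negatives."""
    return max(0.0, z)

def absolute_loss(y, t):
    """Linear cost: grows in proportion to the error."""
    return abs(y - t)

def quadratic_loss(y, t):
    """Quadratic cost: punishes big errors much harder than small ones."""
    return 0.5 * (y - t) ** 2

def cross_entropy_loss(y, t, eps=1e-12):
    """Logarithmic cost for a 0/1 target; y is clipped so log() stays finite."""
    y = min(max(y, eps), 1.0 - eps)
    return -(t * math.log(y) + (1.0 - t) * math.log(1.0 - y))

# The target is 1. Compare a good guess (0.9) with a bad one (0.1).
for guess in (0.9, 0.1):
    print(guess,
          absolute_loss(guess, 1.0),
          quadratic_loss(guess, 1.0),
          cross_entropy_loss(guess, 1.0))
```

Notice how the cross entropy cost blows up much faster than the other two when the neuron is confidently wrong.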

Great strides have been made in neural networks by adjusting the input weights using "Gradient Descent" algorithms, which attempt to find combinations of input weights that minimize the cost function and so maximize the effectiveness of each neuron. A neuron has many inputs to consider - many things shouting at it simultaneously - and its job is to figure out who to listen to, who to ignore and by how much. The error gradients that drive these adjustments are "Back Propagated" from the output toward the input using the Chain Rule from our dear friend Calculus. This is repeated until the ensemble of neurons as a whole is functioning at its best as a group. The act of getting this to happen is called "Training the Neural Network". You can think of it as taking the Neural Network to school. So the bad news is, robots in the future will have to go to school. The good news is that once a single robot is trained, a whole fleet can be trained for the cost of a download. This is wonderful and scary, but I digress.
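Here is a minimal sketch of sending one neuron to school. It assumes a sigmoid activation, a quadratic cost and a toy lesson (the logical OR of two inputs); the learning rate and the number of passes are hypothetical values I picked, not tuned ones. With a single neuron there are no hidden layers to propagate back through, so the chain rule shows up as a product of three simple factors.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy training set: inputs and the desired output (logical OR).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

weights = [0.0, 0.0]
bias = 0.0
learning_rate = 1.0   # hypothetical value

def predict(x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def total_loss():
    return sum(0.5 * (predict(x) - t) ** 2 for x, t in data)

loss_before = total_loss()

for epoch in range(2000):
    for x, t in data:
        y = predict(x)
        # Chain rule: dLoss/dw_i = dLoss/dy * dy/dz * dz/dw_i
        dloss_dy = y - t            # slope of the quadratic cost
        dy_dz = y * (1.0 - y)       # slope of the sigmoid
        delta = dloss_dy * dy_dz
        for i in range(len(weights)):
            weights[i] -= learning_rate * delta * x[i]   # dz/dw_i = x_i
        bias -= learning_rate * delta                    # dz/dbias = 1

loss_after = total_loss()
print(loss_before, loss_after)
print([round(predict(x)) for x, _ in data])
```

Each pass nudges every weight a little way downhill on the cost surface, which is all "Gradient Descent" really means.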

TensorFlow Playground

Before we go any further, you must visit TensorFlow Playground. It is a magical place and you will learn more in ten minutes spent there than doing almost anything else. If you feel uneasy, do what I do: just start pushing buttons willy-nilly until things start making sense. You will be surprised how fast they do, because your neurons are learning about their neurons, and it's peachy keen.

Figure: TensorFlow Playground

CNN - Convolutional Neural Networks

Convolutional Neural Networks are stacks of neurons that detect and classify spatial features in images by sliding small filters across them. They are useful for recognition problems, such as handwriting recognition and translation.
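The "convolution" part can be sketched in a few lines: slide a small grid of weights (a kernel) across the image and record how strongly each patch matches it. The toy image and the hand-picked edge kernel below are my own illustration. In a real CNN the kernel values are learned during training, and like most deep learning code this sketch does not flip the kernel.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1), summing products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# A 4x4 toy image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)   # each row is [0, 2, 0]: the edge lights up the middle column
```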

Figure: Typical CNN

Figure: CIFAR Database

Figure: MNIST Training and Recognition

Figure: Deep Dream Generator

RNN - Recurrent Neural Networks

Just as Convolutional Neural Networks can be used to process and recognize images in novel ways, Recurrent Neural Networks can be used to process signals that vary over time. They can be used to predict prices or crop production, or even to make music. Recurrent Neural Networks use feedback, connecting their outputs back into their inputs in that deeply cosmic Jimi Hendrix sort of way. They can be unwound (unrolled) in time, and when this is done they take on the appearance of a digital filter.
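To see the family resemblance with a digital filter, here is a bare-bones sketch of a single recurrent unit unwound in time. The weights are hypothetical numbers, not trained ones. Each pass through the loop is one time step, and the previous output is fed back in alongside the new input.

```python
import math

def rnn_unrolled(inputs, w_in, w_rec, bias, activation=math.tanh):
    """Run one recurrent unit over a sequence, one loop pass per time step."""
    hidden = 0.0                  # the unit's memory starts out empty
    outputs = []
    for x in inputs:
        # Feedback: the previous output re-enters next to the new input.
        hidden = activation(w_in * x + w_rec * hidden + bias)
        outputs.append(hidden)
    return outputs

impulse = [1.0, 0.0, 0.0, 0.0]    # one kick, then silence

# With the squashing removed, the unit is exactly a first-order feedback filter.
linear = rnn_unrolled(impulse, w_in=1.0, w_rec=0.5, bias=0.0,
                      activation=lambda z: z)
print(linear)    # [1.0, 0.5, 0.25, 0.125]: the kick echoes and fades

# With tanh in place, the same loop is a tiny RNN with bounded outputs.
squashed = rnn_unrolled(impulse, w_in=1.0, w_rec=0.5, bias=0.0)
print(squashed)
```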

Figure: Unwinding an RNN in Time

Figure: Predicting Periodic Functions with an RNN

AE - AutoEncoders

Autoencoders are a unique topology in the world of neural networks because their output layer has as many neurons as their input layer, usually with a narrower layer in between. They are useful for unsupervised learning. They are designed to reproduce their input at the output layer. They can be used for dimensionality reduction, a form of data compression, in much the same way as principal component analysis (PCA).
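A toy sketch of the idea, under some loud assumptions: the data are 2-D points that all sit on the line y = 2x, the network is purely linear (no activation function), and the starting weights and learning rate are numbers I made up. The network has to squeeze each point through a single number and then rebuild it.

```python
# Toy data: 2-D points that all lie on the line y = 2x.
data = [[-1.0, -2.0], [-0.5, -1.0], [0.5, 1.0], [1.0, 2.0]]

# Hypothetical starting weights: 2 inputs -> 1 code value -> 2 outputs.
encoder = [0.1, 0.1]
decoder = [0.1, 0.1]
learning_rate = 0.05

def encode(x):
    """Compress a 2-D point into a single number."""
    return encoder[0] * x[0] + encoder[1] * x[1]

def decode(code):
    """Expand the single number back into a 2-D point."""
    return [decoder[0] * code, decoder[1] * code]

def reconstruction_error():
    total = 0.0
    for x in data:
        y = decode(encode(x))
        total += 0.5 * sum((yi - xi) ** 2 for yi, xi in zip(y, x))
    return total

error_before = reconstruction_error()

for epoch in range(1000):
    for x in data:
        code = encode(x)
        y = decode(code)
        err = [y[i] - x[i] for i in range(2)]      # output minus input
        # Gradient reaching the code value, computed before any update.
        grad_code = sum(err[i] * decoder[i] for i in range(2))
        for i in range(2):
            decoder[i] -= learning_rate * err[i] * code
            encoder[i] -= learning_rate * grad_code * x[i]

error_after = reconstruction_error()
print(error_before, error_after)
print(decode(encode([0.5, 1.0])))   # should land very close to [0.5, 1.0]
```

Note that nobody told the network what the right answer was. The input itself is the target, which is what makes this unsupervised.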

RL - Reinforcement Learning

With Reinforcement Learning, a neural net is trained with rewards, both positive and negative, until the desired behavior is encoded in the neural net. Training can take a long time, but this technique is very useful for training robots to do adaptive tasks like walking and obstacle avoidance. This style of machine learning is one of the most intuitive and easiest to relate to.
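To get a feel for the reward loop without a robot, here is a small sketch. Be warned that I have swapped the neural net for a plain lookup table (this is tabular Q-learning), and the corridor world, rewards and learning settings are all invented for the example. The agent starts at the left end of a five-cell corridor, gets a small negative reward for every step, and a positive reward for reaching the right end.

```python
import random

N_STATES = 5              # corridor cells 0..4; cell 4 is the goal
LEFT, RIGHT = 0, 1
rng = random.Random(0)    # fixed seed so the run is repeatable

def step(state, action):
    """The environment: returns (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == LEFT else state + 1
    if next_state == N_STATES - 1:
        return next_state, 1.0, True       # positive reward at the goal
    return next_state, -0.01, False        # small negative reward for dawdling

# Hypothetical learning settings.
alpha, gamma, epsilon = 0.5, 0.9, 0.2
q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]

for episode in range(500):
    state = 0
    for _ in range(100):                   # cap the episode length
        if rng.random() < epsilon:
            action = rng.choice([LEFT, RIGHT])                            # explore
        else:
            action = RIGHT if q[state][RIGHT] > q[state][LEFT] else LEFT  # exploit
        next_state, reward, done = step(state, action)
        # Move the estimate toward reward plus discounted future value.
        target = reward if done else reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state
        if done:
            break

policy = ["right" if q[s][RIGHT] > q[s][LEFT] else "left"
          for s in range(N_STATES - 1)]
print(policy)
```

A neural net takes the place of the table `q` when there are too many situations to list one by one, but the reward-driven update is the same idea.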

Figure: Components of a Reinforcement Learning System

GANs - Generative Adversarial Networks

GANs are useful for unsupervised learning, an echelon above routine categorization tasks. They typically have two parts, a Generator and a Discriminator. The Generator creates an output, often an image, and the Discriminator decides whether the image is plausible according to its training. The two are trained against each other, hence "adversarial". In the MNIST example below, the gist of the program is, "Draw something that looks like a number". In an interesting limitation, the program does not know the value of the number, only that the image looks like a number. Of course, it would be a quick trip to the trained CNN to get the number recognized.

Figure: GAN Instructed to "Draw Something That Looks Like A Number"
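A full GAN is too big for a blog snippet, but the tug of war between the two parts can be sketched through their cost functions alone. This assumes the Discriminator outputs a score strictly between 0 and 1 (its belief that an image is real) and uses the common logarithmic costs. The scores below are invented numbers, not the output of a trained network.

```python
import math

def discriminator_loss(scores_real, scores_fake):
    """Low when real images score near 1 and generated images score near 0."""
    real_term = -sum(math.log(p) for p in scores_real) / len(scores_real)
    fake_term = -sum(math.log(1.0 - p) for p in scores_fake) / len(scores_fake)
    return real_term + fake_term

def generator_loss(scores_fake):
    """Low when the Discriminator is fooled, i.e. fakes score near 1."""
    return -sum(math.log(p) for p in scores_fake) / len(scores_fake)

# Hypothetical scores early in training: the fakes are easy to spot.
early_real, early_fake = [0.9, 0.95], [0.1, 0.05]
# Hypothetical scores later on: the Discriminator can only guess.
late_real, late_fake = [0.5, 0.5], [0.5, 0.5]

print(discriminator_loss(early_real, early_fake), generator_loss(early_fake))
print(discriminator_loss(late_real, late_fake), generator_loss(late_fake))
```

As the Generator improves, its cost falls while the Discriminator's cost rises. Each side's gain is the other side's loss.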

Conclusion

This short note details several approaches to, and applications of, machine learning. I hope you found it interesting. For more information just follow the links above.
